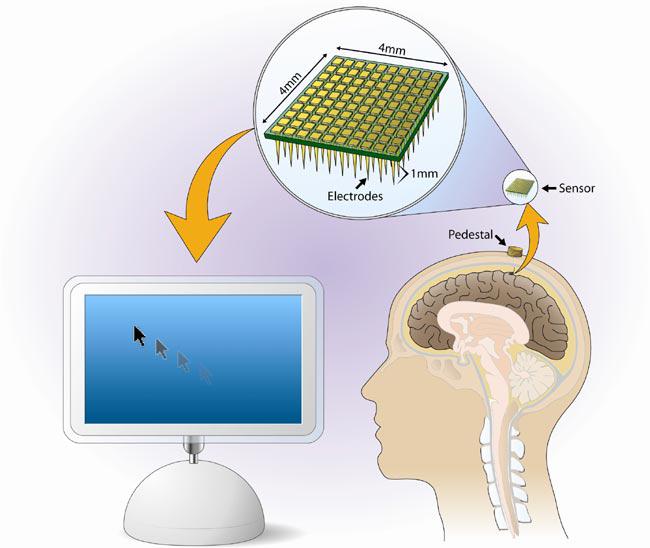

What a brain-controlled computer might feel like

In the main video embedded here, the protagonist is getting used to his new brain-reading gadget, which acts kind of like Siri or Alexa, just inside his mind. At times, it offers him superpowers. With his thoughts alone, he can ask his computer to recall an inspiring passage he read last night. And when he sits down to work, he generates a 3D model in mere seconds by thinking of each button and adjustment he wants to use next, in a rapid-fire editing sequence. But in another moment, his phone starts sending notifications to his brain, and instead of just flooding his screen, they flood his mind like a wave of advertisements that he cannot mute (until he mentally shouts for them to be silenced). The video makes it clear that, for brain interfaces to actually arrive, we need far better consumer standards than what we’re accustomed to today with our phones.

Most of the interfaces in the video work like any other spoken interface, just in your head. That choice is grounded in real research examining the capabilities of BCI systems. Researchers are beginning to read the words people say in their heads, even if what we consider thought is so much richer than our inner monologue.

What if we don’t want to talk? Another rich modality within BCI could be thinking kinesthetically: imagining physical movements that the computer can read. Of course, training your brain to communicate through imagined physical actions is, pardon the pun, a heady idea. So Card79 imagines an accompanying app that would have you picture your hand drawing letters of the alphabet. This idea is also based on active research.